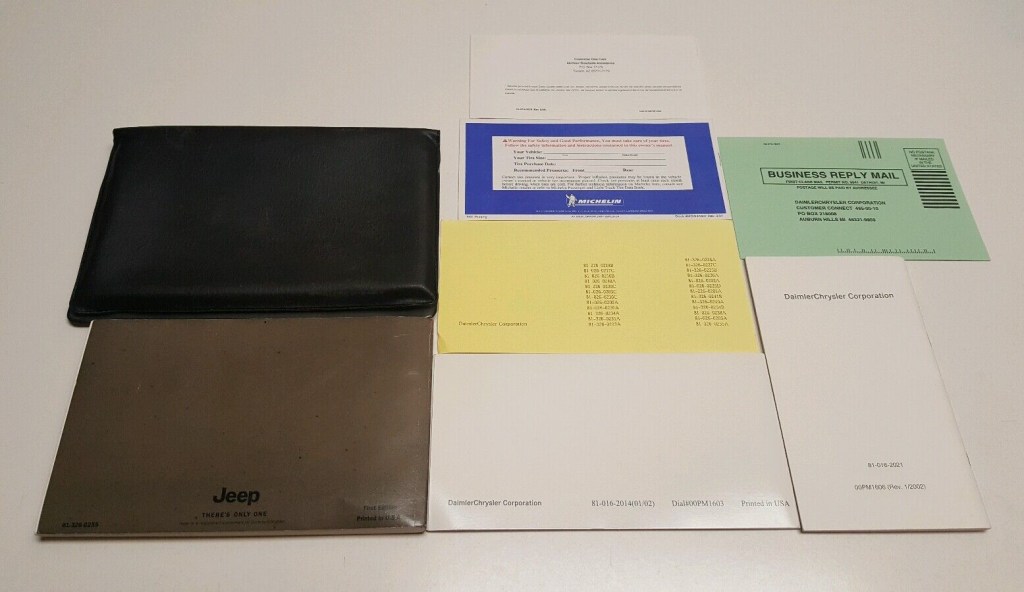

Complete Your 02 Jeep Wrangler Experience With Our Essential Owner's Manual – Download Now!

Jun 18th

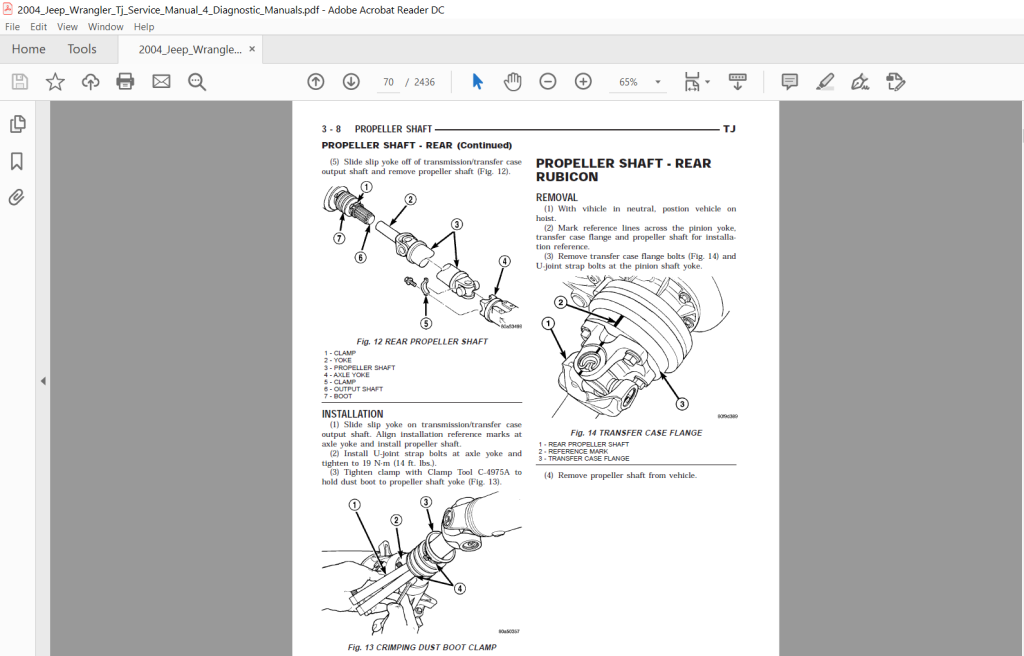

Discovering the 02 Jeep Wrangler Owner's Manual

The 02 Jeep Wrangler Owner's Manual is a comprehensive guide to owning, operating, and maintaining a Jeep Wrangler of this model year. It is designed to help owners understand the features and capabilities of their Jeep and to provide instructions for proper care and…